Like most who entered college in the mid-aughts, I was taken by Facebook. It had a sleek interface. All my friends from high school and college were on it, being super cool with edgy profile pics. I browsed my newsfeed every day, probably for years. But starting in the early 2010s, I noticed an inverse correlation: the more friend requests I received from long-lost aunties and uncles, the fewer posts I would see from my peers. It was almost like the aunties and uncles were driving them away.

There’s a concept in social psychology known as an illusory correlation [1]. Illusory correlations are sort of like stereotypes for relationships between two variables: if enough of a certain type of person does activity X or uses thing Y, then other people start to associate activity X or thing Y with only that type of person. More concretely, if enough aunties and uncles seem to use Facebook (relative to other demographics), then non-aunties-and-uncles start thinking that Facebook must only be for aunties and uncles. At best, the correlation is silly; at worst, it is pernicious. Whatever it is, its effects are often unconscious and real.

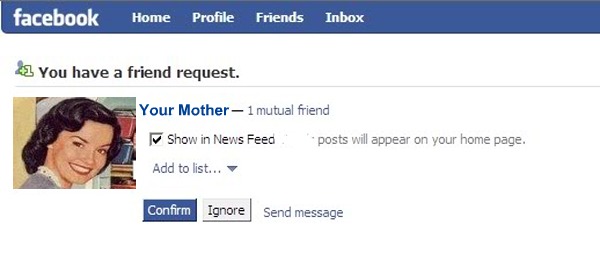

Now, I want you to close your eyes and think about the first person who comes to mind when you think about the attribute “security conscious” or “concerned about privacy”. Readers of this blog may be biased; but, for many non-experts, the person they think about may look something like this:

And therein lies the illusory correlation in consumer security.

If you’ll allow me a moment to be more formal: early adopters of security and privacy features are usually those who are especially concerned about security and privacy. Perhaps this is because they genuinely have some sensitive information to keep away from prying eyes. Perhaps they are simply risk averse. Whatever the reason, many non-experts describe these early adopters, and security experts more generally, as “nutty” or “paranoid”. A classic example of this comes from Shirley Gaw and colleagues’ study on encrypted email usage in the workplace [2]. The authors found that if someone used encrypted email but did not have a “good” reason for doing so (e.g., if they were only asking about lunch), they were perceived by their colleagues as being “paranoid.” I found something similar in my own research [3]: experts felt hesitant about sharing security advice with non-experts to avoid being perceived as “nutty”. The upshot? If only “nutty” or “paranoid” people use security features, and I am not nutty or paranoid…well, those security or privacy features must not be for me.

In short, early adopters of security features may, subtly or overtly, be perceived as paranoid by others; in turn, this stigma can cause non-experts to “disaffiliate” from security. This is what I call the paranoia-disaffiliation hypothesis in end-user security. I call it a hypothesis because it has not been tested in a randomized, controlled experiment [4], but there is a good bit of observational evidence to back it up.

When I worked at Facebook, for example, I ran an analysis of how one’s friends’ use of security features like two-factor authentication affected one’s own use of two-factor authentication [5]. I found a surprising effect: for the vast majority of people, social influence appeared to have a negative effect on one’s own adoption of two-factor authentication. In other words, for the vast majority of people, having N friends who used two-factor authentication made them less likely to use it themselves than if they had fewer than N such friends.
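To make the “N friends versus fewer than N friends” comparison concrete, here is a minimal sketch in Python. It is not the method from the study, just a naive illustration: the `User` record, its field names, the `compare_by_exposure` helper, and the toy data are all made up for this example, and a raw comparison like this ignores confounding factors that a careful analysis would need to account for.

```python
from typing import NamedTuple, Sequence, Tuple


class User(NamedTuple):
    friends_with_2fa: int  # hypothetical field: how many friends use 2FA
    uses_2fa: bool         # hypothetical field: whether this user uses 2FA


def adoption_rate(group: Sequence[User]) -> float:
    """Fraction of users in the group who use 2FA (NaN for an empty group)."""
    if not group:
        return float("nan")
    return sum(u.uses_2fa for u in group) / len(group)


def compare_by_exposure(users: Sequence[User], n: int) -> Tuple[float, float]:
    """Return (rate for users with >= n adopting friends, rate for users with < n)."""
    exposed = [u for u in users if u.friends_with_2fa >= n]
    unexposed = [u for u in users if u.friends_with_2fa < n]
    return adoption_rate(exposed), adoption_rate(unexposed)


# Made-up toy data for illustration only; not taken from the study.
TOY_USERS = [
    User(0, True), User(0, False), User(1, True), User(1, False),
    User(2, False), User(3, False), User(3, True), User(4, False),
]

exposed_rate, unexposed_rate = compare_by_exposure(TOY_USERS, n=2)
print(f"2FA adoption with >= 2 adopting friends: {exposed_rate:.2f}")
print(f"2FA adoption with < 2 adopting friends:  {unexposed_rate:.2f}")
```

In this toy data, the group with more adopting friends has the lower adoption rate, which is the direction of the effect described above. The numbers themselves mean nothing beyond showing what is being compared.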

This is a surprising result. Typically, social “proof” tends to have a positive effect — if more of my friends use X, I should be more likely to use X myself. But, my research suggests that this more “typical” effect of social influence does not come to fruition for typical security tools until many of one’s friends start using security tools (e.g., as demonstrated by the recent WhatsApp exodus to Signal). Social influence for security behaviors is sort of like quantum mechanics in physics: the rules that apply at large scale do not apply at small scale.

So what can we do? My research suggests that one of the key drivers of the paranoia-disaffiliation effect is the unfounded assumption that only geeks use security features. This image of the shady security geek is pernicious, and has been amplified by popular depictions of “hackers”. We also happen to live in a culture that values openness and transparency: “I’ve Got Nothing to Hide”, tired as it is as an argument against privacy, is seen as a badge of honor. But this notion of privacy and security being only for shady geeks is dangerous, and does more harm than good.

There is hope, though. My research suggests that the paranoia-disaffiliation hypothesis does not hold for security and privacy systems that are designed to be more social: i.e., those that can be easily observed when used, that involve others in the process of providing security, or that allow us to act for the benefit of others. By making security and privacy more social, we start to associate security and privacy with more desirable social properties (e.g., altruism, leadership, and responsibility). And if people stop viewing the security conscious as shady geeks, and start viewing them as altruistic leaders, perhaps we can overcome the paranoia-disaffiliation effect.

Thanks for reading! If you think you or your company could benefit from my expertise, I’d be remiss if I didn’t alert you to the fact that I am an independent consultant and accepting new clients. My expertise spans UX, human-centered cybersecurity and privacy, and data science.


1. Hamilton, D. L. (1981). Illusory correlation as a basis for stereotyping. In Cognitive processes in stereotyping and intergroup behavior (pp. 115-144).

2. Gaw, S., Felten, E. W., & Fernandez-Kelly, P. (2006, April). Secrecy, flagging, and paranoia: adoption criteria in encrypted email. In Proceedings of the SIGCHI conference on human factors in computing systems (pp. 591-600).

3. Das, S., Kim, T. H. J., Dabbish, L. A., & Hong, J. I. (2014). The effect of social influence on security sensitivity. In 10th Symposium On Usable Privacy and Security (SOUPS 2014) (pp. 143-157).

4. But if you are a researcher and are interested in formally testing this hypothesis, please reach out! I’d love to collaborate.

5. Das, S., Kramer, A. D., Dabbish, L. A., & Hong, J. I. (2015, February). The role of social influence in security feature adoption. In Proceedings of the 18th ACM conference on computer supported cooperative work & social computing (pp. 1416-1426).